Data map API

This page is under review

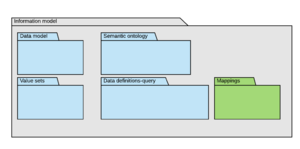

The mappings package is one of the 5 main component categories in the information model.

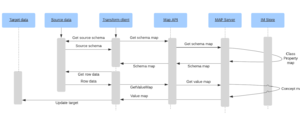

The data map APIs are a set of APIs provided by the information model server to aid the transfer of data between two data sets, where the source and destination data sets each conform to a data model. The source data model, the destination data model, or both may be implemented as relational, graph, or both.

The APIs cover two main types of activities:

- The retrieval of mapping files for use by a transform module client set in the context of a known source type and destination type.

- The mapping of values between source and destination concepts, terms or codes, set in the context of known source properties and destination properties obtained from the mapping files generated as above.

Use cases

The use of a mapping server enables a separation of concerns between the maintenance of data maps and the application that transforms data.

The mapping server is used by a transform module operating as a client.

It is designed to assist a code-based, ORM-like transform, and can thus be used for some or all of the process, depending on the requirements of the transform client. In other words, use of the APIs is a pick-and-mix approach, making it possible to introduce them into currently operating transformations without disruption.

The mapping server operates like a navigator with a map, issuing a set of instructions to the client, while control of the process stays within the code space of the transformation module.

There are 3 main uses of the get map API:

- Relational to object mapping (MR2O). For a particular source system, obtain a map for each table and field in the source together with any source RDB retrieval logic, the classes and properties of the target, and any transformation logic required in between.

- Object to relational mapping (MO2R). For a particular source object store, obtain a map for each class and property in the source together with any object manipulation logic, the tables and fields of the target, and any RDB update logic required.

- Object to object mapping (MO2O). For a particular source object store, obtain a map for each class and property in the source together with any object manipulation logic, the classes and properties of the target, and any object manipulation logic required.

It should be noted that these are conceptually the same at an abstract level and vary only in terminology. The different terminology is used to make the mapping logic clearer. For example, a table join operation is logically the same as a graph traversal, and a dependent table row generation is logically the same as an inverse relationship to an object creation.

There is one main use of the get mapped value API:

- For a codeable value in the source (e.g. a code or text), when taken together with the context of that value, obtain the common information model target concept (or code).

Mapping document

A mapping document is an information model document subtype returned by the mapping server on request from a transform client via the mapping REST API. An example of the source and target context parameters:

{
  "parameter": [
    {
      "name": "sourceContext",
      "value": {
        "provider": "BartsNHSTrust",
        "system": "CernerMillenium",
        "schema": "CDS-APC"
      }
    },
    {
      "name": "targetContext",
      "value": {
        "system": "DiscoveryCore",
        "schema": "DiscoveryInformationModel/V1"
      }
    }
  ]
}

The mapping document itself contains a source and target context object as well as the relevant maps.
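As an illustration only, a transform client might assemble the context parameters shown above as follows. The helper name `build_context_parameters` is hypothetical, and the lower-case key spelling `schema` is an assumption; only the values and the `parameter`, `name` and `value` keys come from the example.

```python
import json


def build_context_parameters(source_context: dict, target_context: dict) -> dict:
    """Wrap the two contexts in the 'parameter' list used by the example."""
    return {
        "parameter": [
            {"name": "sourceContext", "value": dict(source_context)},
            {"name": "targetContext", "value": dict(target_context)},
        ]
    }


# Values copied from the example; the helper itself is hypothetical.
request_body = build_context_parameters(
    {"provider": "BartsNHSTrust", "system": "CernerMillenium", "schema": "CDS-APC"},
    {"system": "DiscoveryCore", "schema": "DiscoveryInformationModel/V1"},
)
print(json.dumps(request_body, indent=2))
```

Building the body from dictionaries and serialising it with `json.dumps` avoids the unbalanced braces that are easy to introduce when the JSON is written by hand.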

The document contains a map between a source and a target, the source being established by a set of properties that identify the nature of the source schema or ontology domain, and the destination likewise established.

The C2C mapping process assumes the interposition of the data model between the source and the destination. In other words the mapping "passes through" the information model. In practice this means that the common data model is mapped only once to the target schema and each source need only be mapped to the information model's data model.

The object value mapping process involves a one, two or three step mapping between a source code or text and a target concept. The first step is optional and is only used when a source code can be first mapped to a known classification code, and the second step takes the mapping to create a concept. A further map occurs when the target itself is a legacy concept i.e. a non core concept, and the core concept is also generated.
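The one, two or three step process can be sketched as below. This is a minimal illustration, not the server's implementation: the three lookup tables and every code and concept name in them are invented for the example.

```python
# Hypothetical lookup tables; all codes and concept names are invented.
SOURCE_TO_CLASSIFICATION = {"LOCAL-01": "CLASS-A"}
CODE_TO_CONCEPT = {"CLASS-A": ":LEGACY_ConceptA", "CLASS-B": ":CM_ConceptB"}
LEGACY_TO_CORE = {":LEGACY_ConceptA": ":CM_ConceptA"}


def map_value(source_code: str) -> dict:
    """Map a source code to a concept, recording which steps were taken."""
    steps = []
    code = source_code

    # Step 1 (optional): source code to a known classification code.
    if source_code in SOURCE_TO_CLASSIFICATION:
        code = SOURCE_TO_CLASSIFICATION[source_code]
        steps.append("source->classification")

    # Step 2: code to concept.
    concept = CODE_TO_CONCEPT.get(code)
    if concept is None:
        return {"concept": None, "core": None, "steps": steps}
    steps.append("code->concept")

    # Step 3 (only for legacy concepts): also generate the core concept.
    core = concept
    if concept in LEGACY_TO_CORE:
        core = LEGACY_TO_CORE[concept]
        steps.append("legacy->core")

    return {"concept": concept, "core": core, "steps": steps}


print(map_value("LOCAL-01"))
print(map_value("CLASS-B"))
```

In this sketch a code that is already a classification code skips the first step, and a concept that is already core skips the third.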

Context dependency

For a source value to be understood, it is necessary to provide some context to it. All values are therefore set in some form of context. As a minimum this context would be the source property, i.e. the property of which the value is a value. In many cases though, the context will be much more extensive.

For example, in a source system, the word 'negative', a value of the field 'result text' associated with the code value '12345', itself a value of the field 'test' in the table 'clinical events', used by the system 'Cerner Millennium' in the hospital 'Barts NHS Trust', may mean something completely different from 'negative' with the code '12345' in another hospital, even one using the same system.
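A minimal sketch of why the full context has to form part of the lookup key. The `ValueContext` type, the second hospital and both target concept names are hypothetical; only the Barts context values are taken from the example above.

```python
from typing import NamedTuple, Optional


class ValueContext(NamedTuple):
    """Everything needed to interpret one source value (hypothetical shape)."""
    provider: str
    system: str
    table: str
    code_field: str
    code: str
    value_field: str
    value: str


barts = ValueContext("Barts NHS Trust", "Cerner Millennium", "clinical events",
                     "test", "12345", "result text", "negative")
# Same system, table, code and text, but a different provider.
other = barts._replace(provider="Another hospital")

# Invented target concepts, one per full context.
CONTEXT_MAP = {
    barts: ":EX_ResultMeaningA",
    other: ":EX_ResultMeaningB",
}


def map_in_context(context: ValueContext) -> Optional[str]:
    """Return the target concept for a value in its full context, if known."""
    return CONTEXT_MAP.get(context)


print(map_in_context(barts))
print(map_in_context(other))
```

Keying on the value alone would collapse the two entries into one, which is exactly the ambiguity the context is there to prevent.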

A further layer of context includes the Domain in which the mapping takes place. A map generated for one purpose may be different when generated for another purpose.

For example, when processing published data into Discovery, the domain in question could be described as the 'inbound publisher mapping domain'.

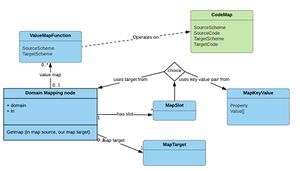

Consequently, the implicit idea of a context and a domain is explicitly modelled as a 'Domain mapping node' object. The class and its various supporting classes are described in the following sections.

Mapping Convergence

When processing data along the pipeline, mapping can be seen as a set of interconnected nodes, each node being triggered by the passing in of one or more source objects, or the outputs from other nodes, finally resulting in the output of a target object for use by the calling application.

Mapping convergence is the means by which the huge number of source types is rationalised to a smaller number, in order to make map authoring simpler and more efficient.

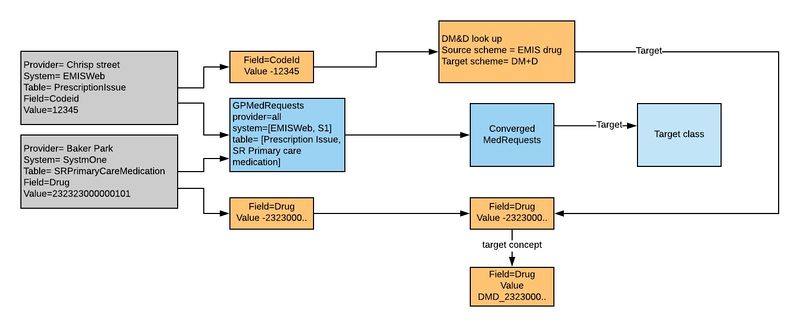

For example, let us say that we are trying to map a drug code from an EMIS drug issue to the common model. We understand that the code comes from Chrisp Street Health Centre, which uses EMIS Web, and the source table in question is the Prescribing issue table, with field of Codeid, and the code value is '12345'.

Likewise, we are also trying to map a drug code from a TPP practice source. We understand that the code comes from the Parkdale Medical Centre, which uses TPP SystmOne, that the source table in question is the PrimaryCareMedication table, that the field is code and that the id is 232000001108.

We also know that EMIS provides a look up table between their code and DM+D, but SystmOne provides the DM+D code itself.

The first thing we recognise is that the contexts of the above two sources appear to be nearly equivalent. Both are going to end up in the same target class and both will end up with the same target value when mapped from DM+D. There is a variation in EMIS in that, before getting to exactly the same context, there is a prior step to perform: the map between EMIS 'X' and the DM+D 'Y'. However, if that mapping were to occur first, then the two contexts would be exactly equivalent.

It appears that there is some form of convergence from two sources. This can be illustrated in the following way:

Convergence can occur in both class mapping (blue

Mapping node chain

When the mapping API is called, the mapping service looks for a mapping node to trigger, the node being the one that accepts the source properties sent in by the client application as inputs. As each node is triggered, maps occur until such time as there are no further nodes to trigger and the output has been reached.

When authoring a map, the map author chains together a series of actions and calls the relevant nodes with the relevant source data properties (or simply passes on the results of a previously triggered node). Thus a chain is built up.
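The triggering behaviour described above can be sketched as a loop that keeps firing any node whose inputs are available until nothing further can fire. This is an illustrative sketch only: the node tuples, the node names and the string-building actions are hypothetical stand-ins for real mapping nodes.

```python
from typing import Callable, Dict, List, Tuple

# A node is (name, required input properties, action producing new properties).
Node = Tuple[str, List[str], Callable[[Dict[str, str]], Dict[str, str]]]


def run_chain(nodes: List[Node], source: Dict[str, str]) -> Tuple[Dict[str, str], List[str]]:
    """Fire each node at most once, as soon as all of its inputs are present."""
    properties = dict(source)
    fired: List[str] = []
    progress = True
    while progress:
        progress = False
        for name, inputs, action in nodes:
            if name in fired:
                continue
            if all(prop in properties for prop in inputs):
                properties.update(action(properties))
                fired.append(name)
                progress = True
    return properties, fired


# Hypothetical nodes: a code look up followed by a final concept map.
NODES: List[Node] = [
    ("final_map", ["Drug"], lambda p: {"Value": "concept-for-" + p["Drug"]}),
    ("code_lookup", ["CodeId"], lambda p: {"Drug": "drug-for-" + p["CodeId"]}),
]

emis_result, emis_fired = run_chain(NODES, {"CodeId": "12345"})
tpp_result, tpp_fired = run_chain(NODES, {"Drug": "drug-for-12345"})
print(emis_fired, tpp_fired)
```

A source that needs a prior look up fires two nodes, while a source that already supplies the intermediate property joins the chain at the final node, so both converge on the same output.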

Because of the convergence concepts, it is likely that the same node will be used in many different mapping chains, and it is equally likely that the same chains will be used by different source systems. It is possible to configure maps that ignore particular source identifiers. For example, to process an EMIS observation entry, the provider id would be ignored, as the only relevant context is the type of system. The provider property would be set to ALL in the mapping configuration.

Mapping node class

'Domain mapping node' sums up the idea that a number of different source structures can in the end converge and map to a single target structure, by dint of the common domain in which the map occurs and the common context that a particular set of sources share.

The primary object is the 'Domain mapping node', which has a uniquely identified context to which a number of sources map. Each different source is referred to as an 'input slot', and all slots must be filled for the map node to be triggered.

A mapping node is authored over time, perhaps initially by selecting a single source, but then recognising that additional sources are able to converge.

In the above example, the author has decided that there are similarities between the EMIS and TPP prescription tables. A node can be created with a set of properties that indicate convergence sufficiently to determine the output class and property. For example:

An example mapping node configuration that deals with some inbound medication. The Source_Provider slot has no value, so any provider will do.

{
  "MapNode": [
    {
      "IRI": ":MN_GPMedRequests",
      "Domain": ":InboundPublisherMaps",
      "Description": "Common convergence for source GP medication issues",
      "MapSlot": [
        { "Property": "Source_Provider" },
        { "Property": "System", "Value": ["EMISWeb", "SystmOne"] },
        { "Property": "Table", "Value": ["PrescriptionIssue", "SRPrimaryCareMedication"] }
      ],
      "AddOutput": {
        "Class": ":DM_MedicationRequest",
        "Property": ":DM_requestedMedication"
      }
    }
  ]
}

We see here that for any provider using EMIS Web or SystmOne as its source system, if the source table is either PrescriptionIssue or SRPrimaryCareMedication, we will eventually end up with the same context, a class of medication request and a property of requested medication.
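A minimal sketch of how the slots of this node might be evaluated. The dictionary copies the `MapSlot` and `AddOutput` keys from the configuration above; the functions `node_triggers` and `run_node`, and the rule that a slot without a `Value` accepts anything as long as the property is present, are assumptions made for illustration.

```python
from typing import Optional

MAP_NODE = {
    "IRI": ":MN_GPMedRequests",
    "MapSlot": [
        {"Property": "Source_Provider"},  # no Value: any provider will do
        {"Property": "System", "Value": ["EMISWeb", "SystmOne"]},
        {"Property": "Table", "Value": ["PrescriptionIssue", "SRPrimaryCareMedication"]},
    ],
    "AddOutput": {"Class": ":DM_MedicationRequest", "Property": ":DM_requestedMedication"},
}


def node_triggers(node: dict, source: dict) -> bool:
    """True when every slot is filled and any listed values are respected."""
    for slot in node["MapSlot"]:
        prop = slot["Property"]
        if prop not in source:
            return False  # all slots must be filled
        allowed = slot.get("Value")
        if allowed is not None and source[prop] not in allowed:
            return False
    return True


def run_node(node: dict, source: dict) -> Optional[dict]:
    """Return the node's output when it triggers, otherwise None."""
    return dict(node["AddOutput"]) if node_triggers(node, source) else None


emis = {"Source_Provider": "Chrisp Street Health Centre",
        "System": "EMISWeb", "Table": "PrescriptionIssue"}
tpp = {"Source_Provider": "Parkdale medical centre",
       "System": "SystmOne", "Table": "SRPrimaryCareMedication"}
print(run_node(MAP_NODE, emis) == run_node(MAP_NODE, tpp))
```

Both sources produce the same class and property, which is the convergence the node is authored to capture.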

We have yet to deal with the remaining EMIS value problem. To do that we create another node to deal with the EMIS variation, which only requires the Codeid and the value as input slots.

This node calls a mapping function with three parameters, one of which is the value of the input property.
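A minimal sketch of how a node like this might resolve its three parameters and invoke the function. The `NODE` dictionary copies the relevant keys of the `:MN_EMISCodeIdLookUp` configuration in this section. The `code_map` function, the reading of the parameter order as source scheme, value, target scheme, and the look up table contents are all assumptions made for illustration.

```python
from typing import List, Optional

NODE = {
    "IRI": ":MN_EMISCodeIdLookUp",
    "Input": {"Property": "CodeId"},
    "MapFunction": {
        "Name": "CodeMap",
        "Parameter": [
            {"Fixed": "EMISCodeId"},
            {"PropertyValue": "CodeId"},
            {"Fixed": "DMD"},
        ],
    },
    "AddOutput": {"Property": "Drug", "FunctionResult": "true"},
}

# Hypothetical look up table; the target code is invented.
CODE_MAPS = {("EMISCodeId", "DMD"): {"12345": "DMD-EXAMPLE-1"}}


def code_map(source_scheme: str, value: str, target_scheme: str) -> Optional[str]:
    """Stand-in for the CodeMap function named in the configuration."""
    return CODE_MAPS.get((source_scheme, target_scheme), {}).get(value)


def resolve_parameters(parameters: List[dict], source: dict) -> List[str]:
    """Turn Fixed and PropertyValue entries into concrete argument values."""
    args = []
    for parameter in parameters:
        if "Fixed" in parameter:
            args.append(parameter["Fixed"])
        elif "PropertyValue" in parameter:
            args.append(source[parameter["PropertyValue"]])
        else:
            raise ValueError(f"Unsupported parameter: {parameter}")
    return args


def run_lookup_node(node: dict, source: dict) -> dict:
    """Call the function and store its result under the output property."""
    args = resolve_parameters(node["MapFunction"]["Parameter"], source)
    return {node["AddOutput"]["Property"]: code_map(*args)}


print(run_lookup_node(NODE, {"CodeId": "12345"}))
```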

A simple mapping node with a property code look up

{
  "MapNode": [
    {
      "IRI": ":MN_EMISCodeIdLookUp",
      "Domain": ":InboundPublisherMaps",
      "Description": "Look up for EMIS code id",
      "Input": { "Property": "CodeId" },
      "MapFunction": {
        "Name": "CodeMap",
        "Parameter": [
          { "Fixed": "EMISCodeId" },
          { "PropertyValue": "CodeId" },
          { "Fixed": "DMD" }
        ]
      },
      "AddOutput": {
        "Property": "Drug",
        "FunctionResult": "true"
      }
    }
  ]
}

We are now ready to converge the two outputs into the final node, which only has to do the final map.

The final mapping node in the chain performing a concept look up

{
  "MapNode": [
    {
      "IRI": ":MN_IMMedicationrRequestEMISTPPDrugMap",
      "Domain": ":InboundPublisherMaps",
      "Description": "Convergence nodes for medication requests from EMIS and TPP",
      "Input": [
        { "MapNode": ":MN_GPMedRequests" },
        { "Property": "Drug" }
      ],
      "MapFunction": { "Name": "ConceptMapper" },
      "AddOutput": {
        "Add": { "FunctionValue": true }
      }
    }
  ]
}

Cumulatively, the result of the map contains the target class, property and value as well as the context node identifiers that generated it.

The final target output to the client being the map result set

{
  "MapResultSet": {
    "MappingShortcut": {
      "ShortCutNode": ":GPMedRequests",
      "RequiredProperty": ["CodeId"]
    },
    "Class": ":DM_MedicationRequest",
    "Property": ":DM_RequestedMedication",
    "Value": ":SN_123300212120"
  }
}

Of significance is the 'mapping shortcut' produced as part of the result. This is a performance-enhancing value that the client can use in the next API call to speed up the response. If the client is confident that the class and property are going to be the same (as a result of being the same source table and field), then only the property name and value need be submitted.
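A client-side sketch of how a shortcut might be remembered and reused. The `ShortcutCache` class and the shape of the reduced request are hypothetical, since this page does not define how a shortcut is submitted; only the `MappingShortcut`, `ShortCutNode` and `RequiredProperty` keys come from the result set.

```python
from typing import Dict, Tuple


class ShortcutCache:
    """Remember the shortcut returned for each source table and field."""

    def __init__(self) -> None:
        self._shortcuts: Dict[Tuple[str, str], dict] = {}

    def remember(self, table: str, field: str, response: dict) -> None:
        shortcut = response.get("MapResultSet", {}).get("MappingShortcut")
        if shortcut:
            self._shortcuts[(table, field)] = shortcut

    def build_request(self, table: str, field: str, source: dict) -> dict:
        """Send the full source first time, then only the required properties."""
        shortcut = self._shortcuts.get((table, field))
        if shortcut is None:
            return dict(source)
        request = {"ShortCutNode": shortcut["ShortCutNode"]}  # assumed request shape
        for name in shortcut["RequiredProperty"]:
            request[name] = source[name]
        return request


response = {"MapResultSet": {
    "MappingShortcut": {"ShortCutNode": ":GPMedRequests", "RequiredProperty": ["CodeId"]},
    "Class": ":DM_MedicationRequest",
    "Property": ":DM_RequestedMedication",
    "Value": ":SN_123300212120"}}

cache = ShortcutCache()
source = {"System": "EMISWeb", "Table": "PrescriptionIssue", "CodeId": "12345"}
first = cache.build_request("PrescriptionIssue", "CodeId", source)
cache.remember("PrescriptionIssue", "CodeId", response)
second = cache.build_request("PrescriptionIssue", "CodeId", dict(source, CodeId="67890"))
print(first, second)
```

The cache is keyed on table and field because those are what the client relies on when it assumes the class and property will be unchanged.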

Furthermore, it can be seen from the logical two-step mappings that it is equally practical for clients to consider a direct map from source to destination, knowing that it has mapped to the common model as part of the process. This style of mapping contrasts with conventional integration mappings that map from many to many directly. In other words, by mapping in two stages we get a series of one to one maps which appear to be one to many.

To demonstrate more easily how mappings work, there is a working example showing a walk through of the use of the mapping API using the resource examples illustrated below.

Target DB schema resources

Before doing any mappings, it is necessary to model a target schema in order to map to it.

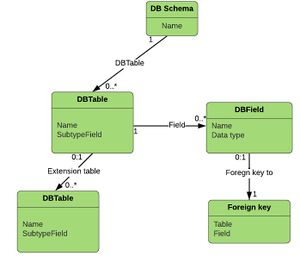

Implementation schema resources are a set of objects of the class DBSchema (to the right)

The class is designed as a simple entity relationship class with 2 additional properties:

- The name of the table's extension tables. These are optional triple tables designed so that a schema can continue to extend to additional properties and values using the information model to determine the properties and data types. This avoids the need to continually change the relational schema with new data items.

- The name of the field holding the subtype indicator. This is described as the entity subtype attribute.

The following is an example of a snippet from an encounter table:

A schema table example showing extension table and subtype field

{
  "DBSchema": {
    "DBSchemaName": "Compass_version_1",
    "DBTable": {
      "DBTableName": "encounter",
      "DBExtensionTable": {
        "DBTableName": "encounter_extension"
      },
      "DBSubTypeField": "type"
    }
  }
}

The encounter table expects subtypes to be authored, so it carries a subtype field (here named "type") in the table itself, which avoids generating many subtype tables.
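A minimal sketch of how a client might use these two properties when planning writes. The schema dictionary copies the example; the function `plan_writes`, the `id` and `patient_id` column names, the set of core fields and the layout of an extension row as an (id, property, value) triple are assumptions made for illustration.

```python
SCHEMA = {"DBSchema": {
    "DBSchemaName": "Compass_version_1",
    "DBTable": {
        "DBTableName": "encounter",
        "DBExtensionTable": {"DBTableName": "encounter_extension"},
        "DBSubTypeField": "type",
    },
}}


def plan_writes(schema: dict, core_fields: set, row_id: int, subtype: str, values: dict) -> dict:
    """Split values between the main table row and extension table triples."""
    table = schema["DBSchema"]["DBTable"]
    # The subtype goes into the field named by DBSubTypeField.
    core_row = {"id": row_id, table["DBSubTypeField"]: subtype}
    extension_rows = []
    for prop, value in values.items():
        if prop in core_fields:
            core_row[prop] = value
        else:
            # Properties with no column of their own become triples.
            extension_rows.append((row_id, prop, value))
    return {
        table["DBTableName"]: [core_row],
        table["DBExtensionTable"]["DBTableName"]: extension_rows,
    }


plan = plan_writes(
    SCHEMA,
    core_fields={"patient_id"},
    row_id=1,
    subtype=":CM_HospitalInpAdmitEncounter",
    values={"patient_id": 42,
            ":DM_admissionPatientClassification": ":CM_AdmClassOrdinary"},
)
print(plan)
```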

Original source Resources

Every map has a source and a target. From the perspective of the information model, an 'original source' represents a data model created from a publisher's source data, i.e. it is likely to be a relational or JSON representation of publisher data that might have been originally delivered as HL7 V2, XML, JSON, CSV or pipe delimited flat files. Source resources are therefore not representations of the actual data, but representations of a model that would be used when transforming to the common model. An example of this is a staging table.

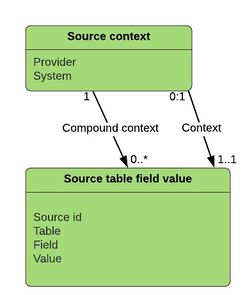

It is assumed that a source may contain many tables, each with many fields, each with many values including text. It is not necessary for there to be actual tables and fields; any object structure masquerading as such can be used. The terms 'table' and 'field' are used for convenience and refer to objects and properties just as well.

There may be differences between one provider and another using the same system, and between different versions of the system. Thus there is a need to provide context for each source resource.

Each element of source data must explicitly inherit the context so that the mapping API can recognise the context with each request.

The Original source resource object reflects a single logical thing to map. In most cases this will be a single field and single value. However, in some cases (such as free text sources), the source is derived from a list of fields, each with certain values.

For example, a piece of text saying "negative", when contextualised as a result against a test for 'Hepatitis B surface antigen' would use compound context consisting of the table, the test field, the test code, the result field and the result text of negative.

An example original source object from a CDS admitted patient care record with a value of '1' for the admitted patient classification

{
  "OriginalSource": {
    "Provider": "Barts",
    "System": "CernerMillenium",
    "Context": {
      "id": 1,
      "Table": "APC",
      "Field": "PATIENT_CLASSIFICATION_CODE",
      "Value": 1
    }
  }
}

The original source resource would be used as part of a mapping request submitted via the API.

Information model target resource

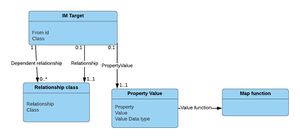

The common information model target resource is the target of a map from an original source.

The resource is delivered as part of the IM mapping API response.

The target resource indicates the class and properties of the target object (which may need to be created, or added to with the target property) and the target value.

In some cases multiple sources may link to one target, and in other cases multiple targets may link to one source, the link item being the "from id" link from the target.

An example target resource from a request containing an original source object

{
  "IMTarget": {
    "Fromid": 1,
    "Class": ":CM_HospitalInpAdmitEncounter",
    "DependentRelationship": {
      "Relationship": ":RM_isComponentOf",
      "Class": ":DM_HospitalInpEntry"
    },
    "PropertyValue": {
      "Property": ":DM_admissionPatientClassification",
      "Value": ":CM_AdmClassOrdinary"
    }
  }
}

The class, properties and values of the IM resource all reference IM concepts.

This resource says that the target IM class is a "hospital inpatient admission encounter" type. This object is dependent on the presence of a container encounter, in this case of type "hospital inpatient stay", and the relationship between them is 'is component of'. The source field value results in the property 'admission patient classification' with the value 'Ordinary admission'.
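A minimal sketch of how a transform client might act on such a target resource, using an in-memory list as a stand-in for the real object store. The function `apply_target`, the object layout and the rule for finding an existing container by class are assumptions; the target dictionary copies the example above.

```python
IM_TARGET = {"IMTarget": {
    "Fromid": 1,
    "Class": ":CM_HospitalInpAdmitEncounter",
    "DependentRelationship": {
        "Relationship": ":RM_isComponentOf",
        "Class": ":DM_HospitalInpEntry",
    },
    "PropertyValue": {
        "Property": ":DM_admissionPatientClassification",
        "Value": ":CM_AdmClassOrdinary",
    },
}}


def new_object(class_iri: str) -> dict:
    return {"class": class_iri, "properties": {}, "relationships": []}


def apply_target(target: dict, store: list) -> dict:
    """Create the target object, its container if missing, and set the property."""
    body = target["IMTarget"]
    obj = new_object(body["Class"])

    dependency = body.get("DependentRelationship")
    if dependency:
        # Reuse a container of the required class, or create one.
        container = next((o for o in store if o["class"] == dependency["Class"]), None)
        if container is None:
            container = new_object(dependency["Class"])
            store.append(container)
        obj["relationships"].append((dependency["Relationship"], container))

    property_value = body["PropertyValue"]
    obj["properties"][property_value["Property"]] = property_value["Value"]
    store.append(obj)
    return obj


store = []
admission = apply_target(IM_TARGET, store)
print([o["class"] for o in store])
```

The container is created before the dependent object so that the relationship always points at something that already exists in the store.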

Note that the information model resource uses subtypes as classes, in line with the ontology. It avoids the complexity involved in populating database schemas. However, the DB target resource does include the specific instructions as to how to populate the types.

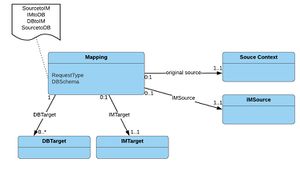

Map Request

For a map to be provided it needs to be requested and it is the job of the IM mapping API to respond to a request.

A mapping resource is used both as a request and as a reference, i.e. it can be exported as a set of maps or mapped on request.

Note that as the mapping API is designed to be used as individual requests for individual values, this class does not inherit properties or classes in the source or target. A typical map request using the above example could be:

An example request from a source system to a DB target

{
  "Mapping": [
    {
      "Request": "SourcetoDB",
      "OriginalSource": {
        "Provider": "Barts",
        "System": "CernerMillenium",
        "Context": {
          "id": 1,
          "Table": "AdmittedPatientCare",
          "Field": "PatientClassificationCode",
          "Value": 1
        }
      },
      "TargetDBSchema": "Compass_version1"
    }
  ]
}

Bringing it all together

A working example of the above, for a client wishing to go from a source to a database target, is illustrated below:

{
  "MapResultSet": {
    "Result": [
      { "Class": ":DM_MedicationRequest" },
      { "Property": ":DM_RequestedMedication" },
      { "ContextNode": ":GPMedRequests" },
      { "Value": ":SN_123300212120" }
    ]
  }
}